Cyber winds ༄༄

Cybersecurity and infrastructure software trends, supported by 33N ventures

As Generative AI continues to accelerate in maturity and adoption, enterprise CISOs are still working through its main security challenges. What are the key risks? Where are CISOs starting, and what have they learned so far? How is AI security being prioritised? Which pieces of the stack are being adopted? At 33N, we recently discussed the topic at our CISO circle, looking to collaboratively shed some light on a few of these questions. Let's dive in!

Adoption trends

To set the scene: despite its potential, GenAI adoption across organizations remains relatively modest, with only 9% of enterprises integrating it comprehensively into their operations.

Code co-pilots are the top use case, making the IT department the front-runner in GenAI adoption. GenAI is also frequently applied in security co-pilots, but CISOs recognize that this comes with notable concerns, most of them still unsolved.

In marketing, sales, and customer support, key use cases include content creation, knowledge search, summarization, and recommendations. Content creation raises fewer concerns about data leakage or hallucinations; the other use cases raise considerably more.

The cautious approach to widespread adoption highlights the need for robust data management and cybersecurity frameworks to mitigate the associated risks. Enterprises have increased investment in both as building blocks for GenAI adoption.

Vulnerabilities in ML

Past implementations of machine learning (ML) have exposed several types of attack, including inference attacks, training-data poisoning, data leakage, and model stealing. These vulnerabilities underscore how critical it is to secure ML models, as failures can lead to catastrophic consequences. Unlike implementation bugs, these problems are rooted in the mathematical foundations of ML, which makes them particularly challenging to address.

Adversarial ML is especially concerning due to the near-infinite and unpredictable adversarial space. Attacks on one model often transfer to others, complicating the security landscape. This lack of predictability and the absence of an easy fix further accentuate the necessity for advanced security measures.
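To make the idea of an adversarial input concrete, here is a minimal sketch in the spirit of the fast gradient sign method, applied to a toy linear classifier. The weights, the input, and the step size `eps` are made-up values for illustration only; real attacks target far larger models, but the mechanism (nudging each feature slightly in the direction that hurts the model most) is the same.

```python
def score(weights, bias, x):
    """Linear classifier score; a positive score means the positive class."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias

def sign(value):
    """Return -1, 0, or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)

def perturb(weights, x, eps):
    """Shift every feature by at most eps in the direction that lowers the score.

    For a linear model the gradient of the score with respect to the
    input is simply the weight vector, so we step against sign(weights).
    """
    return [xi - eps * sign(w) for w, xi in zip(weights, x)]

# Hypothetical model parameters and input, chosen only for illustration.
weights = [1.0, -2.0, 0.5]
bias = -0.1
x = [0.3, 0.1, 0.2]

x_adv = perturb(weights, x, eps=0.1)

print("original score:", score(weights, bias, x))         # positive class
print("adversarial score:", score(weights, bias, x_adv))  # flipped to negative
```

No feature moves by more than 0.1, yet the predicted class flips. The score drops by `eps` times the sum of the absolute weights, which is why models with many input dimensions can be so sensitive to small perturbations.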

Vulnerabilities in LLMs

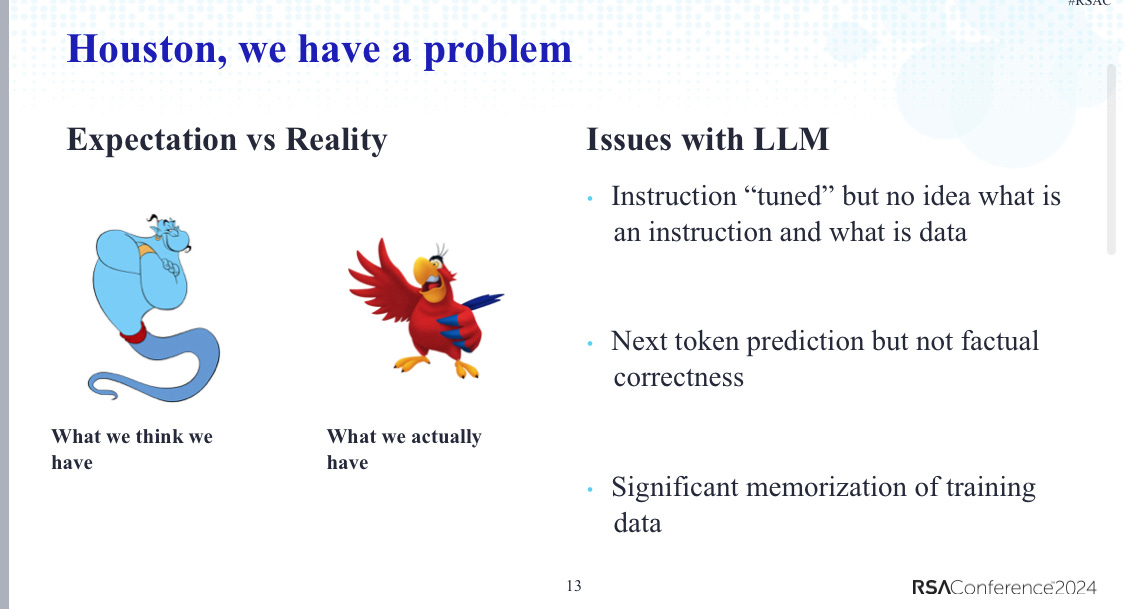

Large Language Models present a different set of challenges.

First, these models process sequential inputs: text is broken into tokens, and the model predicts the next token based on the previous ones. Trained on vast amounts of language and code data, LLMs learn a conditional probability distribution over the next token. In short, these models function less like a 'genie co-worker' and more like a 'parrot', which presents an intrinsic risk for their users.
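As a rough illustration of "conditional probability of the next token", the sketch below estimates it from simple counts over a tiny made-up corpus. This is a bigram toy model, not how an LLM is actually built (LLMs condition on long contexts with neural networks), and the function names are chosen for this example only.

```python
from collections import Counter, defaultdict

def train_bigram(tokens):
    """Count how often each token follows each preceding token."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def next_token_probs(counts, prev):
    """Estimate P(next token | previous token) from the counts."""
    total = sum(counts[prev].values())
    return {tok: c / total for tok, c in counts[prev].items()}

# Toy corpus, whitespace-tokenized for simplicity.
corpus = "the model predicts the next token and the next token follows".split()
counts = train_bigram(corpus)
probs = next_token_probs(counts, "the")

# The most likely continuation is whatever was seen most often in training.
print(probs)
print(max(probs, key=probs.get))
```

The model has no notion of truth or intent: it repeats whatever followed "the" most often in its training data, which is the 'parrot' behavior described above.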

Second, every stage of the LLM lifecycle is susceptible to attack. These attacks include poisoning training data, stealing training data, tricking the model into following malicious instructions, and forcing the model to produce adversarial outputs or hallucinate. Furthermore, the model's 'constitution' can be compromised, exacerbating the security challenges.

Securing Models: The Complex Task

Securing AI models is a complex endeavor with no 'silver bullet' solution. From the get-go, security differs across models and configurations (e.g., one might set a high temperature for a content creation use case and a low temperature for an enterprise knowledge search use case).
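To see why temperature changes a model's behavior (and therefore what needs securing), the sketch below applies a temperature-scaled softmax to a set of made-up logits. The logit values and the two temperature settings are assumptions for illustration; actual ranges and defaults depend on the model provider.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores for three candidate next tokens.
logits = [2.0, 1.0, 0.0]

low = softmax_with_temperature(logits, 0.2)   # knowledge-search style setting
high = softmax_with_temperature(logits, 2.0)  # content-creation style setting

print("low temperature: ", [round(p, 3) for p in low])
print("high temperature:", [round(p, 3) for p in high])
```

At low temperature almost all probability mass sits on the top token, so outputs are close to deterministic. At high temperature the distribution flattens, so the same prompt can produce many different outputs, which widens the space that testing and guardrails have to cover.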

Different approaches also have different limitations:

Red-teaming, while essential, cannot cover all potential attack paths,

Safety fine-tuning and Reinforcement Learning from Human Feedback (RLHF) cannot cover every case, because models behave probabilistically, and both can cause regressions in other model behaviors,

Dual-LLMs or guard LLMs require optimizing security across multiple models,

Retrieval-Augmented Generation (RAG) can enhance fine-tuning and security measures. However, RAG is not foolproof: it is exposed to weak access controls, data poisoning in vector databases, insecure embeddings, and prompt injections that can steal context.
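One of the RAG weaknesses listed above, missing access controls at retrieval time, can be sketched as follows. This is a minimal in-memory example under stated assumptions: the `Chunk` type, the role names, and the two-dimensional embeddings are all hypothetical, and a real deployment would rely on the filtering features of its vector database rather than a Python list.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class Chunk:
    text: str
    embedding: tuple          # toy 2-d embedding, for illustration only
    allowed_roles: frozenset  # roles permitted to read this chunk

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def retrieve(query_embedding, chunks, user_roles, k=2):
    """Filter by access rights first, then rank the remaining chunks."""
    visible = [c for c in chunks if c.allowed_roles & user_roles]
    ranked = sorted(
        visible,
        key=lambda c: cosine(query_embedding, c.embedding),
        reverse=True,
    )
    return ranked[:k]

chunks = [
    Chunk("Public holiday calendar", (0.9, 0.1), frozenset({"employee", "hr"})),
    Chunk("Salary bands by grade", (0.1, 0.9), frozenset({"hr"})),
]
query = (0.2, 0.8)  # a query that is semantically close to the salary chunk

employee_hits = retrieve(query, chunks, frozenset({"employee"}))
hr_hits = retrieve(query, chunks, frozenset({"hr"}))

print([c.text for c in employee_hits])
print([c.text for c in hr_hits])
```

The filter runs before ranking, so a restricted chunk never reaches the prompt, however relevant it is to the query. Filtering after generation would be too late, since the model would already have seen the content.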

Early Building Blocks in GenAI Security

From collective insights shared by CISOs and experts, a common set of solutions is being implemented as a first approach:

1. Securing access to data: Implementing Role-Based Access Control (RBAC), Data Loss Prevention (DLP), and/or vector databases that support Access Control Lists (ACLs) helps manage data securely and mitigate the risk of data leakage,

2. Retrieval augmented generation (RAG): Used primarily for fine-tuning, RAGs provide some security enhancements,

3. AI Firewalls: Preventing data leakage on both input and output, as well as enabling tracking/monitoring of usage patterns.
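The AI firewall idea in the list above can be sketched as a thin wrapper that redacts sensitive patterns on the way in and on the way out, and records what it found. Everything here is an assumption made for illustration: the two regular expressions are deliberately simplistic, `redact` and `guarded_call` are hypothetical names rather than any product's API, and the model is a stub function.

```python
import re

# Deliberately simple patterns; real DLP rules are far more extensive.
PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "card": re.compile(r"\b\d{16}\b"),
}

def redact(text):
    """Replace sensitive matches with placeholders and report what was found."""
    findings = []
    for label, pattern in PATTERNS.items():
        text, hits = pattern.subn(f"[{label.upper()} REDACTED]", text)
        if hits:
            findings.append((label, hits))
    return text, findings

def guarded_call(prompt, model, audit_log):
    """Filter the prompt, call the model, filter the response, log both sides."""
    safe_prompt, input_findings = redact(prompt)
    response = model(safe_prompt)
    safe_response, output_findings = redact(response)
    audit_log.append({"input": input_findings, "output": output_findings})
    return safe_response

def stub_model(prompt):
    """Stand-in for an LLM call; it echoes the prompt and leaks an address."""
    return f"Echo: {prompt} Contact bob@example.org"

audit_log = []
prompt = "Summarize the ticket from alice@example.com about card 4111111111111111"
answer = guarded_call(prompt, stub_model, audit_log)

print(answer)
print(audit_log)
```

The audit log is what enables the tracking and monitoring of usage patterns mentioned above: it records which categories of sensitive data were caught on each side of the call without storing the data itself.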

Despite these measures, several CISOs reckon their practices are not yet mature enough to allocate sufficient budget for comprehensive AI security.

To their advantage, while several anecdotal examples of attacks have garnered attention over the last few years (e.g., the Chevrolet dealership chatbot that users manipulated in December 2023), current incidents mostly stem from misconfigurations rather than sophisticated cyber threats.

As the industry matures, frameworks and guidelines are helping enterprises shape their initial approaches. MITRE ATLAS, ISO/IEC 42001, and FS-ISAC's Guidance on AI Risks have been commonly referenced in shaping AI security practices.

In conclusion, while the adoption of GenAI offers immense potential, it comes with significant security challenges that require a multifaceted and proactive approach. Organisations must invest in robust security frameworks, continuous monitoring, and adaptive strategies to safeguard their AI systems against evolving threats.

If you are building or assessing solutions in ML/GenAI security, please don't hesitate to reach out - we'd be glad to exchange perspectives.